GitOps secrets management with SOPS, Helm-secrets and ArgoCD

Introduction

There is currently no standard way to manage secrets such as credentials, API keys or other sensitive information in a GitOps deployment workflow. This post explains why we switched from our previous solution to a GitOps approach, and the main security issue we had to fix in order to move forward.

Why the change to GitOps

When we first started looking for ways to secure our AWS infrastructure, we noticed that our EKS API server was wide open to the internet and that IAM was the only layer of security the cluster had. To reduce our footprint, we decided to make the cluster private by only allowing a subset of IPs to interact with its API server. All of our Kubernetes workloads were managed with Terraform, so to still be able to deploy to the cluster we either needed to create a bastion and allow only that server to connect to the API, or we could ditch Terraform and switch to another deployment workflow.

We went with the second option, as at the time we were running into a lot of hurdles deploying CRDs to our cluster with Terraform. Plus, constantly translating YAML into HCL had become a real chore (even with tooling). So we decided to try ArgoCD, which looked to us like a nice compromise: we could still deploy ArgoCD and base-layer workloads like the Cluster Autoscaler, the AWS ALB Controller or the EBS CSI Driver with Terraform modules, and delegate all other workloads to ArgoCD.

Security Issues

One of the main issues for a team migrating to GitOps is the handling of credentials, which could previously be kept out of your Terraform code using solutions like AWS Secrets Manager. At first we decided to keep handling secrets in Terraform, using the Kubernetes provider from HashiCorp. But then we had the joyful surprise of finding that this did not work easily with some Helm charts, as some needed credentials directly visible in their configuration files, among other deployment quirks. So we needed a solution to cypher secrets, store them in our repos and let ArgoCD pull and decypher them.

How to make ArgoCD, SOPS and Helm-secrets work together

Pre-requisites

In order to make everything work nicely, we will need the following:

- sops installed on your workstation.
- a KMS symmetric key deployed in your AWS Account and configured so you can manage it and use it with a specific IAM User.
- ArgoCD deployed using version 2.5.5 or newer, using the official helm chart at version 5.16.14 or newer.
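The workstation side of these prerequisites can be checked with a few lines of shell. This is only a sketch: the `require_tool` helper is a name made up for this post, and the list of tools in the usage example is an assumption about what you will run locally.

```shell
#!/bin/sh
# Hypothetical helper (not part of sops or helm-secrets):
# returns 0 if the given command is found on PATH, 1 otherwise.
require_tool() {
  if command -v "$1" >/dev/null 2>&1; then
    return 0
  fi
  echo "missing tool: $1" >&2
  return 1
}

# Check several tools at once; returns 1 if any of them is missing.
require_tools() {
  status=0
  for tool in "$@"; do
    require_tool "$tool" || status=1
  done
  return "$status"
}
```

For example, `require_tools sops helm aws || exit 1` at the top of a script stops early when one of them is not installed.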

Install helm-secrets and sops in ArgoCD

For this part I would actually recommend reading the ArgoCD integration manual in the helm-secrets GitHub repository, as it is well written apart from a missing segment on credentials, which should also be added to the application-controller and not only the repo-server: https://github.com/jkroepke/helm-secrets/blob/main/docs/ArgoCD Integration.md

But here I will try to explain my installation process.

ArgoCD does not ship with sops and helm-secrets in its default image, so we need to add them ourselves, either by customizing the image we deploy or by using an initContainer that we can configure in the helm chart values. We added the following block to our values.yaml:

repoServer:
  env:
    - name: HELM_PLUGINS
      value: /custom-tools/helm-plugins/
    - name: HELM_SECRETS_SOPS_PATH
      value: /custom-tools/sops
    - name: HELM_SECRETS_VALS_PATH
      value: /custom-tools/vals
    - name: HELM_SECRETS_KUBECTL_PATH
      value: /custom-tools/kubectl
    - name: HELM_SECRETS_CURL_PATH
      value: /custom-tools/curl
    # https://github.com/jkroepke/helm-secrets/wiki/Security-in-shared-environments
    - name: HELM_SECRETS_VALUES_ALLOW_SYMLINKS
      value: "false"
    - name: HELM_SECRETS_VALUES_ALLOW_ABSOLUTE_PATH
      value: "false"
    - name: HELM_SECRETS_VALUES_ALLOW_PATH_TRAVERSAL
      value: "false"
    # helm secrets wrapper mode installation (optional)
    # - name: HELM_SECRETS_HELM_PATH
    #   value: /usr/local/bin/helm
  volumes:
    - name: custom-tools
      emptyDir: {}
  volumeMounts:
    - mountPath: /custom-tools
      name: custom-tools
    # helm secrets wrapper mode installation (optional)
    # - mountPath: /usr/local/sbin/helm
    #   subPath: helm
    #   name: custom-tools
  initContainers:
    - name: download-tools
      image: alpine:latest
      command: [sh, -ec]
      env:
        - name: HELM_SECRETS_VERSION
          value: "3.12.0"
        - name: KUBECTL_VERSION
          value: "1.24.3"
        - name: VALS_VERSION
          value: "0.18.0"
        - name: SOPS_VERSION
          value: "3.7.3"
      args:
        - |
          mkdir -p /custom-tools/helm-plugins
          wget -qO- https://github.com/jkroepke/helm-secrets/releases/download/v${HELM_SECRETS_VERSION}/helm-secrets.tar.gz | tar -C /custom-tools/helm-plugins -xzf-;

          wget -qO /custom-tools/sops https://github.com/mozilla/sops/releases/download/v${SOPS_VERSION}/sops-v${SOPS_VERSION}.linux
          wget -qO /custom-tools/kubectl https://dl.k8s.io/release/v${KUBECTL_VERSION}/bin/linux/amd64/kubectl

          wget -qO- https://github.com/variantdev/vals/releases/download/v${VALS_VERSION}/vals_${VALS_VERSION}_linux_amd64.tar.gz | tar -xzf- -C /custom-tools/ vals;

          # helm secrets wrapper mode installation (optional)
          # RUN printf '#!/usr/bin/env sh\nexec %s secrets "$@"' "${HELM_SECRETS_HELM_PATH}" >"/usr/local/sbin/helm" && chmod +x "/custom-tools/helm"

          chmod +x /custom-tools/*
      volumeMounts:
        - mountPath: /custom-tools
          name: custom-tools

Configure ArgoCD for Helm-secrets

Once this part is done, we need to allow the helm-secrets URL schemes so that ArgoCD accepts them for helm values files. This can be done the same way as the previous sops and helm-secrets installation, using the ArgoCD values.yaml file:

configs:
  cm:
    helm.valuesFileSchemes: >-
      secrets+gpg-import, secrets+gpg-import-kubernetes,
      secrets+age-import, secrets+age-import-kubernetes,
      secrets, secrets+literal,
      https

Configure ArgoCD to retrieve the AWS KMS symmetric key

The official documentation only talks about configuring the repo-server; however, I found that we also need to give KMS access to the application-controller workload so it can properly retrieve the key while an application synchronises with the Git repo.

So we will create one IAM User for ArgoCD and attach a policy to it so we can retrieve and decypher the secrets sops will generate. In our case we called the user sandbox-safe-argo-sa-kms-key-read and associated the following JSON policy with it:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "kms:GetPublicKey",
        "kms:DescribeKey"
      ],
      "Resource": "arn:aws:kms:<region-id>:<your-aws-account-id>:key/<your-key-id>"
    }
  ]
}
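To avoid editing the ARN by hand, the policy can be rendered from a small shell helper. This is a sketch under assumptions: `kms_key_arn` and `render_policy` are hypothetical names introduced here, and the region, account ID and key ID are whatever applies on your side. The actions are kept exactly as listed in the policy above.

```shell
#!/bin/sh
# Hypothetical helper: build the KMS key ARN used in the "Resource" field.
# $1 = region, $2 = AWS account ID, $3 = key ID
kms_key_arn() {
  printf 'arn:aws:kms:%s:%s:key/%s\n' "$1" "$2" "$3"
}

# Hypothetical helper: print the policy document with the ARN filled in.
# Takes the same three arguments as kms_key_arn.
render_policy() {
  cat <<EOF
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": ["kms:GetPublicKey", "kms:DescribeKey"],
      "Resource": "$(kms_key_arn "$1" "$2" "$3")"
    }
  ]
}
EOF
}
```

The output can be written to a file with `render_policy <region-id> <your-aws-account-id> <your-key-id> > policy.json` and attached with `aws iam put-user-policy --user-name sandbox-safe-argo-sa-kms-key-read --policy-name <your-policy-name> --policy-document file://policy.json`. Since sops decrypts through the KMS Decrypt API, an access error at decryption time may mean `kms:Decrypt` still has to be granted somewhere, for example in the key policy.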

Once the key ARN is adapted to what you have on your side, you will be able to generate the needed credentials and store them in a Kubernetes secret, which should look like this once created:

apiVersion: v1
data:
  AWS_ACCESS_KEY_ID: accesskeybase64somewhatlongstring
  AWS_DEFAULT_REGION: regionbase64supersmallstring
  AWS_SECRET_ACCESS_KEY: Secretaccesskeybase64superlongstring
kind: Secret
metadata:
  name: sandbox-safe-argo-kms-key-read
  namespace: <wherever-you-deployed-argocd>
type: Opaque

Now we will mount that secret as environment variables inside the repo-server and the application-controller pods, using the values.yaml again:

controller:
  envFrom:
    - secretRef:
        name: sandbox-safe-argo-kms-key-read
repoServer:
  envFrom:
    - secretRef:
        name: sandbox-safe-argo-kms-key-read
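The sandbox-safe-argo-kms-key-read secret referenced by both envFrom entries stores its values base64-encoded under `data`. If you write the manifest by hand, a helper along these lines produces the encoded strings. It is a sketch: `b64` is a made-up name, and it assumes a `base64` command is available on your workstation.

```shell
#!/bin/sh
# Hypothetical helper: base64-encode a value for the "data" field of a Secret.
# printf avoids encoding a trailing newline; tr strips any line wrapping.
b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}
```

For instance, `b64 eu-west-1` prints `ZXUtd2VzdC0x`, which is the kind of short string that goes under AWS_DEFAULT_REGION. Alternatively, `kubectl create secret generic sandbox-safe-argo-kms-key-read --namespace <namespace> --from-literal=AWS_DEFAULT_REGION=<region>` (plus the two key entries) does the encoding for you.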

Configuring ArgoCD and SOPS

We previously installed sops in the ArgoCD pod using an initContainer, and now we need to tell it how to behave when decyphering secrets passed through the Git repo. In the folder of any application deployed helm-chart style, we have different files and folders:

└── k8s-monitoring
    ├── templates
    │   ├── gatus.yaml
    │   ├── grafana-dashboards.yaml
    │   ├── kube-prometheus-stack.yaml
    │   ├── loki.yaml
    │   ├── metrics-server.yaml
    │   ├── prometheus-operator-crds.yaml
    │   └── promtail.yaml
    ├── Chart.yaml
    ├── values.sops.yaml
    └── values.yaml

Using this example, we will add a .sops.yaml file in the parent folder of our k8s-monitoring folder. The file tells sops which KMS key to use and which values to cypher, and looks like this:

---
creation_rules:
  - kms: <arn-of-your-key>
    encrypted_suffix: '_sops_cypher'

On our side, we want to be able to cypher a specific set of keys while still keeping a somewhat readable file. So we decided to use the encrypted_suffix option to only cypher (and later decypher) the values associated with keys ending in _sops_cypher. There will be an example further below.
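To make the suffix rule concrete, here is a small shell illustration of which keys it selects. It only mimics the rule and is not how sops is implemented; `has_encrypted_suffix` is a hypothetical name, and the key names are taken from the examples used in this post.

```shell
#!/bin/sh
# Illustration only: returns 0 when the key name ($1) ends with the suffix ($2).
has_encrypted_suffix() {
  case "$1" in
    *"$2") return 0 ;;
    *) return 1 ;;
  esac
}

# Classify a few key names the way the suffix rule would.
for key in project target_version username_sops_cypher password_sops_cypher; do
  if has_encrypted_suffix "$key" "_sops_cypher"; then
    echo "$key: cyphered"
  else
    echo "$key: left readable"
  fi
done
```

Here only username_sops_cypher and password_sops_cypher are reported as cyphered, while project and target_version stay readable.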

Test your application

In this part we will use the application Gatus: https://github.com/TwiN/gatus

Cypher your secrets

└── k8s-monitoring
    ├── templates
    │   ├── gatus.yaml
    │   ├── grafana-dashboards.yaml
    │   ├── kube-prometheus-stack.yaml
    │   ├── loki.yaml
    │   ├── metrics-server.yaml
    │   ├── prometheus-operator-crds.yaml
    │   └── promtail.yaml
    ├── Chart.yaml
    ├── values.sops.yaml
    └── values.yaml

To take an example here, we are looking at a pretty general monitoring stack managed by a single parent ArgoCD application called k8s-monitoring. Each application is deployed using a child Application object that is rendered from the values in its own file (such as gatus.yaml), but also from the values.yaml file of the k8s-monitoring parent application. Here is what gatus.yaml looks like:

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: gatus
  namespace: argocd
  labels:
    app.kubernetes.io/name: gatus
    app.kubernetes.io/managed-by: argocd
  finalizers:
    - resources-finalizer.argocd.argoproj.io
spec:
  project: {{ .Values.project }}
  source:
    chart: gatus
    repoURL: https://avakarev.github.io/gatus-chart
    # Auto update minor & patch release
    # https://argo-cd.readthedocs.io/en/stable/user-guide/tracking_strategies/#helm
    targetRevision: {{ .Values.gatus.target_version }}
    helm:
      values: |
        image:
          tag: v5.1.1
          pullPolicy: IfNotPresent
        serviceMonitor:
          enabled: true
        config:
          metrics: true
          endpoints:
            ###############################################################
            ## Google
            ###############################################################
            - name: testing google
              group: website
              url: https://google.com
              interval: 5m
              conditions:
                - '[STATUS] <= 299'
              alerts:
                - enabled: true
                  type: pagerduty
                  description: "healthcheck failed"
                  send-on-resolved: true
  destination:
    server: https://kubernetes.default.svc
    namespace: {{ .Values.gatus.namespace }}
  syncPolicy:
    ## https://argo-cd.readthedocs.io/en/stable/user-guide/auto_sync
    automated:
      prune: true
      selfHeal: true
    syncOptions:
      - CreateNamespace=true

From this file we know gatus will be deployed in a project defined in the values.yaml and use a specific version of its helm chart thanks to:

spec.project: {{ .Values.project }}
spec.source.targetRevision: {{ .Values.gatus.target_version }}

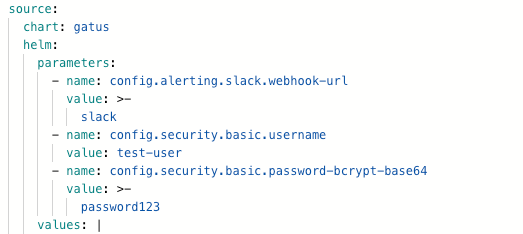

But now we want to add a notification using a slack integration and also have a more secure username/password pair than just the default ones. To achieve that we will add the following key/values to gatus.yaml:

spec:
  source:
    helm:
      parameters:
        - name: config.alerting.slack.webhook-url
          value: {{ .Values.gatus.slack_webhook_sops_cypher }}
        - name: config.security.basic.username
          value: {{ .Values.gatus.username_sops_cypher }}
        - name: config.security.basic.password-bcrypt-base64
          value: {{ .Values.gatus.password_sops_cypher }}

By default, our k8s-monitoring application handles values via the values.yaml file, but if we want to add credentials or sensitive data, we need to tell ArgoCD that some cyphered key/value pairs exist in a specific file and need to be decyphered. To that end, we will create a file at the same level as values.yaml called values.sops.yaml. This file will hold the cyphered key/values that we want to store:

gatus:
  slack_webhook_sops_cypher: slack
  username_sops_cypher: test-user
  password_sops_cypher: password123

To cypher the keys, we need to run this command locally:

sops -e -i values.sops.yaml

The file will now look like:

gatus:
  slack_webhook_sops_cypher: ENC[AES256_GCM,data:value,iv:bDZ5ewI2Jqn2nUvq5gj02Fzi78Dnoy6XO4eM3ZEtP5A=,tag:lVYgPj0ZH/R4sFGt39355g==,type:str]
  username_sops_cypher: ENC[AES256_GCM,data:BwnewZHdolYC,iv:vTfBXs2cESuoeHvShJ8X/mDrYOjUeIADcdz98Z3jZS0=,tag:FvixuHa3KmNFlZicNPstJg==,type:str]
  password_sops_cypher: ENC[AES256_GCM,data:hnI+PyBiNSsh5ZY=,iv:EDNTjRgALW7SOBzT7jkhALCaEdXDM+pLAQg2HMd+F0o=,tag:Sz9qZ/vv6OmwMCxkVfr9Sg==,type:str]
sops:
  kms:
    - arn: <arn-key>
      created_at: "date"
      enc: longkey
      aws_profile: ""
  gcp_kms: []
  azure_kv: []
  hc_vault: []
  age: []
  lastmodified: "date"
  mac: ENC[AES256_GCM,data:vF752cqiB70UUyiPHy59JCTkTRsA0gSACVnbqAbPXUhUep+icGmcNzfL3TzLxMVyQ5YVOFtydEuSLj3HlXAWUP1W8TSk+uk+rbOPPOIrYd4W4wUyyTo4t7DAyAikWyAkT/bDX/8vpKwvFK4RcdaRcSXV4uT28KxoCTaP+zpX67E=,iv:ysBaiAv+WkFPVNJnEUT5zt7ozj4WUyjahSWUcXrjc1U=,tag:hJ7gaa7k6cANTaPMM+iqkQ==,type:str]
  pgp: []
  unencrypted_suffix: _unencrypted
  version: 3.7.3

Tell ArgoCD where your secrets are located

We will now have to let the parent application know where our secrets are, so it can decypher them and use them to generate the full manifests. Before installing sops and helm-secrets, our application looked as follows:

apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  labels:
    app.kubernetes.io/name: k8s-monitoring-apps
  name: k8s-monitoring-apps
  namespace: argocd
spec:
  destination:
    namespace: argocd
    server: https://kubernetes.default.svc
  project: k8s-monitoring
  source:
    path: k8s-monitoring
    repoURL: https://github.com/my-org/my-sandbox-repo
    targetRevision: HEAD
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
    syncOptions:
      - CreateNamespace=true

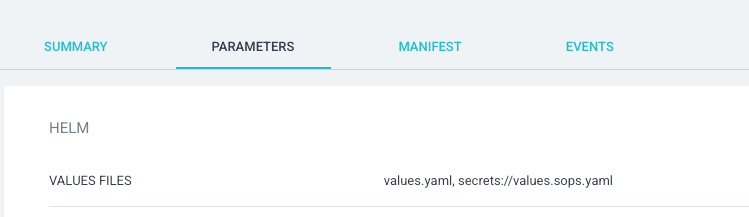

But now we do want to add sensitive data to our child gatus application, so we will need to define more specifically where the k8s-monitoring app gets its values by adding the following key/values:

spec:
  source:
    helm:
      valueFiles:
        - values.yaml
        - secrets://values.sops.yaml

This will let ArgoCD know:

- It must use values.yaml to get part of its values.
- It must use sops to decypher the values.sops.yaml file to get the rest of its values before applying the generated manifest.
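Since ArgoCD reads values.sops.yaml straight from Git, it can be worth checking locally that the file really is cyphered before pushing. The function below is a rough sketch of such a check: `looks_sops_encrypted` is a hypothetical name, and the test is a simple text heuristic (a top-level sops: block plus at least one ENC[AES256_GCM, value), not a validation done by sops itself.

```shell
#!/bin/sh
# Hypothetical pre-push check: succeeds only if the file ($1) looks sops-encrypted,
# i.e. it has a top-level "sops:" metadata block and at least one ENC[...] value.
looks_sops_encrypted() {
  grep -q '^sops:' "$1" && grep -q 'ENC\[AES256_GCM,' "$1"
}
```

A typical use would be `looks_sops_encrypted k8s-monitoring/values.sops.yaml || { echo "values.sops.yaml is not cyphered" >&2; exit 1; }` in a script that runs before each push.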

Test Results

After the application synchronises, you will be able to see both values files appear in the parent k8s-monitoring application:

We can now check what the child gatus app looks like: